In the past few years, big data and data science have grown into mainstream prominence across countless industries. High tech companies in Silicon Valley are no longer the sole purveyors of topics like Hadoop, logistic regression, and machine learning, and familiarity with big data technologies is increasingly a requirement for tech jobs everywhere. Unfortunately, getting real, hands-on experience with big data technologies typically means having access to an expensive computer cluster to run your queries. However, the recent single board computer revolution has made true distributed computing accessible for personal use and education, for tasks like these and more.

I have worked in the big data space for eight years. While I have had access to a cluster to crunch petabytes of data for some time, I have never had the opportunity to design and build a cluster of my own. I decided to build a small cluster primarily to become more familiar with the setup and operation of big data software and of the cluster underneath it. My goal was to build a four-node cluster for under US$600 that was powerful enough to reasonably process datasets in the tens of gigabytes.

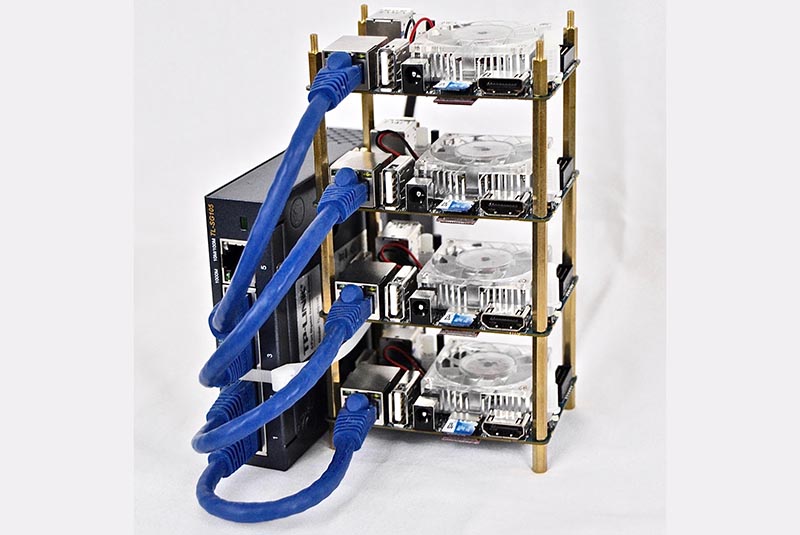

The key considerations when selecting the cluster hardware are data storage and I/O, networking performance, CPU cores, and available RAM. Fortunately, Hardkernel makes a single board computer that excels in all of these areas: the ODROID-XU4. With a Samsung Exynos 5422 octa-core processor (four Cortex-A15 cores at 2GHz plus four Cortex-A7 cores), onboard Gigabit Ethernet, multiple USB 3.0 ports, 2 GB of RAM, and support for high performance storage on both eMMC modules and UHS-1 microSD cards, the XU4 is a formidable single board computer for a relatively low cost.

With the node hardware selected, our first task is to design the cluster topology, that is, how the nodes will be connected to each other. Several factors influence this, most notably the type of distributed computing you expect to do. Distributed computing paradigms can be roughly categorized as either big CPU or big data. For this project, we are focusing on the big data use case, specifically data analysis. The most common big data paradigm for data analysis today is MapReduce, implemented most famously by Apache Hadoop and generalized by Apache Spark; both are very popular data processing frameworks used by many of the big tech companies out there.
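To make the map and reduce phases concrete, here is a toy, single-machine word count written as a shell pipeline. It is only an analogy, assuming standard POSIX tools (`tr`, `sort`, `uniq`, `awk`), and the function names are illustrative: the map step emits one key per line, `sort` plays the role of the shuffle that groups identical keys, and the reduce step counts each group. Hadoop Streaming runs user mappers and reducers in this same stdin/stdout style, but spreads the work across many nodes.

```shell
#!/bin/sh
# Toy word count in MapReduce style, run on a single machine.
# Illustrative only: a real cluster runs many mappers and reducers in parallel.

# Map: split the input into one word (the key) per line.
map_words() {
    tr -s '[:space:]' '\n'
}

# Shuffle: sorting brings identical keys next to each other.
shuffle_keys() {
    LC_ALL=C sort
}

# Reduce: count each run of identical keys and print "word<TAB>count".
reduce_counts() {
    uniq -c | awk '{ printf "%s\t%s\n", $2, $1 }'
}

wordcount() {
    map_words | shuffle_keys | reduce_counts
}
```

For example, `printf 'the cat the dog\n' | wordcount` prints `cat 1`, `dog 1`, and `the 2` (tab-separated), one per line.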

In most commercial-scale MapReduce clusters, the general topology has any number of edge nodes that users log into to use the cluster, one or more head nodes that the cluster uses to coordinate both compute activity and data storage, and any number of slave nodes that are used for compute tasks, data storage, or both (see Figure 1). Think of it like dividing a large project among multiple people to speed everyone up: a director (the edge node) issues the project request, several managers (the head nodes) coordinate what to do, and employees (the slave nodes) carry out those tasks and combine their work into a final solution for the director.

For our XU4 cluster, we are going to combine the edge node and head node roles into a single master node and link the slaves to it. The master node will therefore be both the node users log into and the node that coordinates the slaves. This also implies that node-to-node communication within the cluster occurs over a private network, while the master node also needs a connection to the outside network. Given that, the networking design for a four-node XU4 cluster needs to resemble the one shown in Figure 2.

This topology requires the master node to connect to two separate networks. However, the XU4 has only one Ethernet port, so a second network connection must be added to the master node with a USB 3.0 Ethernet dongle.

The XU4 offers two storage options: an eMMC drive and a microSD card. Both have their pros and cons. The eMMC drive is extremely fast, while the microSD card is much cheaper per gigabyte but slower. The good news is that a UHS-1 microSD card's read and write performance can be on par with spinning hard drives, which are typically used in large commercial clusters, making the microSD card a good option for bulk data storage. The eMMC drive's speed, on the other hand, makes it attractive as a boot drive from which software is executed. Given that, each node in our cluster will have both an eMMC drive to boot from and a microSD card for bulk data storage. I recommend at least a 16GB eMMC drive for the master node, since that is where you, as a user, will work, while money can be saved by using the cheaper 8GB eMMC drives for the slave nodes. For data storage, find fast microSD cards of 64GB or larger for each of the nodes.
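As a rough check against the goal of handling tens of gigabytes, assume all four nodes carry a 64GB microSD card used for Hadoop's HDFS, and that HDFS keeps its default replication factor of 3 (both are assumptions, since Hadoop is not installed in this article). Ignoring file system and operating system overhead, the usable capacity is roughly:

$$
C_{\text{usable}} \approx \frac{N \cdot C_{\text{card}}}{r} = \frac{4 \times 64\ \text{GB}}{3} \approx 85\ \text{GB}
$$

That leaves room for datasets in the tens of gigabytes plus intermediate job output, and the figure scales linearly with card size.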

The final set of materials needed for the project includes a small Ethernet switch for the cluster's internal network, several 6-inch Ethernet cables, and PCB standoffs to stack the XU4s together. I also picked up a serial UART adapter for the XU4 in case I needed to connect to a device directly to sort out any issues, although I never needed it. One item I did not purchase, but in retrospect would be nice to have, is a single power supply that can provide 5V at 4 amps to all the nodes simultaneously, rather than a messy and inefficient collection of wall adapters plugged into a power strip. That will be a future improvement to the project.
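To size such a supply, assume each XU4 may draw up to the 4 A its own 5V adapter is rated for (an assumption; the master's USB Ethernet dongle is powered through that node). For four nodes:

$$
I_{\text{total}} = 4 \times 4\ \text{A} = 16\ \text{A}, \qquad P_{\text{total}} = 5\ \text{V} \times 16\ \text{A} = 80\ \text{W}
$$

So a single 5V supply rated for at least 16 A (80 W), preferably with some headroom, would replace the four wall adapters.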

Once all the materials are collected and the cluster is constructed, our first task is to configure the operating system and networking on all nodes. I chose ODROID's current Ubuntu 15.10 distribution for the XU4. I flashed this OS onto each of the eMMC modules, and then booted each device one by one, without the microSD card (which will be used for storage after provisioning) and directly connected to my home network. This allowed me to SSH into each device right after its first boot. After each device booted, I found the IP address it obtained from my home's DHCP server and logged in. The default user account is “odroid” with a password of “odroid”. After connecting, I installed the ODROID Utility to further configure the OS. It can be downloaded directly from GitHub:

```shell
sudo -s
wget -O /usr/local/bin/odroid-utility.sh https://raw.githubusercontent.com/mdrjr/odroid-utility/master/odroid-utility.sh
chmod +x /usr/local/bin/odroid-utility.sh
odroid-utility.sh
```

The ODROID Utility is used here for three tasks: naming the node, disabling Xorg, and expanding the eMMC drive's partition to its maximum size. I named the master node master, and the other three slave1, slave2, and slave3.

The master node needs further configuration to use the USB 3.0 Ethernet dongle for its external network. To have the master node get its external internet connection from the network attached to the dongle, create a file named “eth1” in the /etc/network/interfaces.d/ directory with the following contents (assuming that network has a DHCP server):

```
auto eth1
iface eth1 inet dhcp
```

Similarly, to use the onboard Ethernet port for the internal cluster network, create a file named eth0 in the same directory that assigns a static IP address:

```
auto eth0
iface eth0 inet static
    address 10.10.10.1
    netmask 255.255.255.0
    network 10.10.10.0
    broadcast 10.10.10.255
```

A DHCP server needs to be set up on the master node to provide IP addresses to the slave nodes on the internal network, and the master node must also provide NAT between the external and internal networks. Furthermore, every node needs its /etc/hosts file edited so that nodes can be addressed by name without a DNS service. Detailed instructions for these tasks can be found on my blog at http://bit.ly/2aJdAmi.
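The three master-node tasks above can be sketched as a small helper script. This is a minimal sketch, not necessarily the approach described on the blog: it assumes ISC dhcpd (Ubuntu package isc-dhcp-server) as the DHCP server, iptables for NAT, 8.8.8.8 as a hypothetical upstream DNS server, and that each slaveN is pinned to 10.10.10.(N+1) by a DHCP reservation on its MAC address. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch of the master node's internal-network services (assumptions noted).
# Internal network: 10.10.10.0/24, master = 10.10.10.1 (from the eth0 file).
# Assumption: slaveN is pinned to 10.10.10.(N+1) by a DHCP reservation.

SUBNET_PREFIX="10.10.10"
# Hypothetical upstream DNS server handed to the slaves; override as needed.
DNS_SERVER="${DNS_SERVER:-8.8.8.8}"

# Print /etc/hosts lines for the master and slave1..slaveN.
cluster_hosts() {
    n_slaves=$1
    printf '%s.1\tmaster\n' "$SUBNET_PREFIX"
    i=1
    while [ "$i" -le "$n_slaves" ]; do
        printf '%s.%d\tslave%d\n' "$SUBNET_PREFIX" "$((i + 1))" "$i"
        i=$((i + 1))
    done
}

# Print an ISC dhcpd.conf fragment; arguments are the slaves' MACs in order.
dhcpd_conf() {
    printf 'subnet %s.0 netmask 255.255.255.0 {\n' "$SUBNET_PREFIX"
    printf '  option routers %s.1;\n' "$SUBNET_PREFIX"
    printf '  option broadcast-address %s.255;\n' "$SUBNET_PREFIX"
    printf '  option domain-name-servers %s;\n' "$DNS_SERVER"
    printf '}\n'
    i=1
    for mac in "$@"; do
        printf 'host slave%d {\n' "$i"
        printf '  hardware ethernet %s;\n' "$mac"
        printf '  fixed-address %s.%d;\n' "$SUBNET_PREFIX" "$((i + 1))"
        printf '}\n'
        i=$((i + 1))
    done
}

# Enable NAT from the internal network (eth0) to the external one (eth1).
# Must run as root; these rules are not persistent across reboots.
enable_nat() {
    sysctl -w net.ipv4.ip_forward=1
    iptables -t nat -A POSTROUTING -o eth1 -j MASQUERADE
    iptables -A FORWARD -i eth1 -o eth0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT
    iptables -A FORWARD -i eth0 -o eth1 -j ACCEPT
}
```

On every node, `cluster_hosts 3 | sudo tee -a /etc/hosts` appends the name mappings. On the master, the output of `dhcpd_conf` with the three slaves' MAC addresses (shown by `ip link show eth0` on each slave) goes into /etc/dhcp/dhcpd.conf, and `enable_nat` is run as root.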

Once the nodes are configured for the desired networking design, shut them down and disconnect them from the home network. Connect each node's onboard Ethernet port to the internal network's Ethernet switch, and connect your home network to the master node's USB 3.0 Ethernet dongle.

Before restarting the nodes, format each microSD card with an ext4 file system and insert one into each node. Boot up all the devices. You should be able to SSH into the master node, and from there SSH into each slave. The final setup task is to configure the /etc/fstab file on each device so that the microSD card is mounted at a /data mount point. To do this, find the UUID of the microSD card's volume with the blkid command after mounting it for the first time, then add a line to the /etc/fstab file that looks like:

```
UUID=c1f7210a-293a-423e-9bde-1eba3bcc9c34 /data ext4 defaults 0 0
```

Replace the UUID shown above with your own microSD card's UUID; this step is also detailed on my blog. Once these steps are completed, you will have a fully configured cluster that is ready to have big data software such as Hadoop installed. Installing Hadoop is a fairly involved process, which I will cover in a future article. For now, we have successfully provisioned an XU4 cluster that is ready for distributed data processing. Further information about this ODROID-XU4 cluster can be found at http://bit.ly/2aJdAmi.
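The fstab step can also be scripted on each node. Below is a minimal sketch, assuming it is run as root and that the microSD card's ext4 partition appears as /dev/mmcblk1p1 (a hypothetical device name; check with `lsblk` on your nodes). The function names are illustrative.

```shell
#!/bin/sh
# Sketch: mount a node's microSD card at /data via /etc/fstab.
# Assumption: run as root; the card's ext4 partition device is passed in.

# Build the fstab line for a given file system UUID.
fstab_line() {
    printf 'UUID=%s /data ext4 defaults 0 0\n' "$1"
}

# Look up the partition's UUID, append the fstab entry once, and mount it.
setup_data_mount() {
    dev=$1
    uuid=$(blkid -s UUID -o value "$dev") || return 1
    [ -n "$uuid" ] || return 1
    mkdir -p /data
    # Skip the append if an entry for this UUID already exists.
    grep -q "^UUID=$uuid " /etc/fstab || fstab_line "$uuid" >> /etc/fstab
    mount /data
}
```

For example, `setup_data_mount /dev/mmcblk1p1` run on each node creates /data, records the card in /etc/fstab, and mounts it; because the entry is keyed by UUID, the mount survives device renumbering across reboots.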
