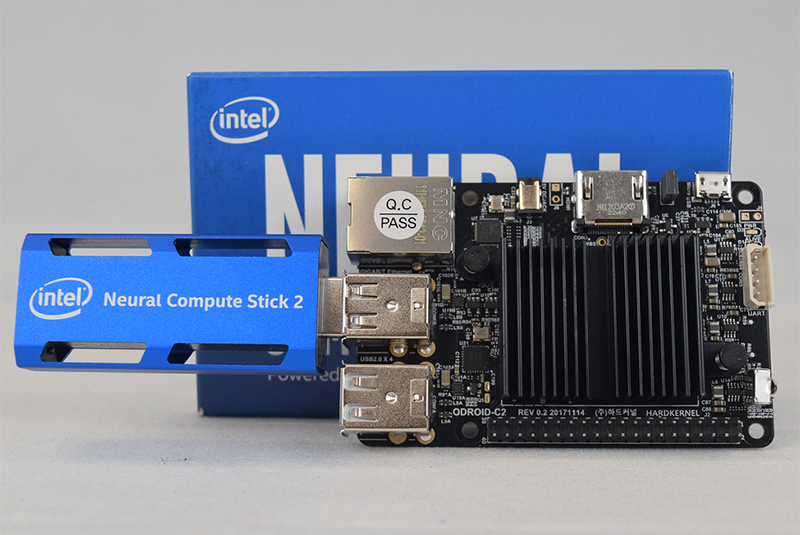

The Intel® Distribution of the OpenVINO™ toolkit and the Intel® Neural Compute Stick 2 (Intel® NCS 2) are the perfect complement for vision applications in low-power development environments. Getting set up on so many different architectures presents opportunities. ARM platforms such as ARM64 are becoming increasingly common for developers building and porting solutions with low-powered single-board computers (SBCs), and these can have widely varying requirements compared to traditional x86 computing environments. While the Intel® Distribution of the OpenVINO™ toolkit provides a binary installation for multiple environments, including the popular Raspberry Pi* SBC, the open-source version of the Intel® OpenVINO™ toolkit lets developers build the toolkit and port applications to various environments. HARDKERNEL CO., LTD’s ODROID-C2 is a microcomputer similar to the Raspberry Pi. The ODROID-C2 is an ARM64 platform with a quad-core processor and 2 GB of RAM, enough for multiple applications. This article guides you through setting up an ODROID-C2 with Ubuntu* 16.04 (LTS), building CMake*, OpenCV, and the Intel® OpenVINO™ toolkit, setting up your Intel® NCS 2, and running a few samples to make sure everything is ready for you to build and deploy your Intel® OpenVINO™ toolkit applications.

Although these instructions were written for the ODROID-C2*, the steps should be similar for other ARM64* SBCs, such as the ODROID-N2, as long as your environment uses a 64-bit operating system. If your device uses a 32-bit operating system supporting at least the ARMv7 instruction set, visit this ARMv7 article: https://intel.ly/2DyJjpt. For general instructions on building and using the open-source distribution of the OpenVINO™ toolkit with the Intel® Neural Compute Stick 2 and the original Intel® Movidius™ Neural Compute Stick, see the article at: https://intel.ly/2P9kQga.

Hardware

Make sure that you satisfy the following requirements before beginning. This will ensure that the entire install process goes smoothly:

- ARM64 SBC such as the ODROID-C2

- AT LEAST an 8GB microSD Card. You may utilize the onboard eMMC module if one is attached, but you will need a microSD card to write the operating system to the board

- Intel® Neural Compute Stick 2

- Ethernet Internet connection or compatible wireless network

- Dedicated DC Power Adapter

- Keyboard

- HDMI Monitor

- HDMI Cable

- USB Storage Device

- Separate Windows*, Ubuntu*, or macOS* computer (like the one you’re using right now) with a compatible microSD card reader, for writing the operating system image to the microSD card

Setting Up Your Build Environment

This guide assumes you are using the root user and does not include sudo in its commands. If you have created another user and are logged in as that user, run these commands as root to install them correctly.

Make sure your device software is up to date:

$ apt update && apt upgrade -y

Some of the toolkit’s dependencies do not have prebuilt ARM64 binaries and need to be built from source, which can increase the build time significantly compared to other platforms. Preparing to build the toolkit requires the following steps:

- Installing build tools

- Installing CMake* from source

- Installing OpenCV from source

- Cloning the toolkit

These steps are outlined below:

Installing Build Tools

Install build-essential:

$ apt install build-essential

This installs and sets up the GNU C and C++ compilers (gcc and g++) along with make. If everything completes successfully, move on to install CMake* from source.

Install CMake* from Source

The open-source version of Intel® OpenVINO™ toolkit (and OpenCV, below) uses CMake* as its build system. The version of CMake in the package repositories for both Ubuntu 16.04 (LTS) and Ubuntu 18.04 (LTS) is too old for our purposes, and no official binary exists for the platform, so we must build the tool from source. As of writing, the most recent stable supported version of CMake is 3.14.4. To begin, fetch CMake from the Kitware* GitHub* release page, extract it, and enter the extracted folder:

$ wget https://github.com/Kitware/CMake/releases/download/v3.14.4/cmake-3.14.4.tar.gz
$ tar xvzf cmake-3.14.4.tar.gz
$ cd ~/cmake-3.14.4

Run the bootstrap script to configure the build, then compile and install CMake:

$ ./bootstrap
$ make -j4
$ make install

The install step is optional, but recommended; without it, CMake runs from the build directory. The number of jobs the make command uses can be adjusted with the -j flag, and setting it to the number of cores on your platform is recommended. You can check the number of cores on your system with the command grep -c ^processor /proc/cpuinfo. Be aware that setting the number too high can exhaust the board's memory and cause the build to fail; if time permits, running 1 to 2 jobs is recommended. CMake is now fully installed.
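As a rough way to pick a -j value, the sketch below counts cores the same way as the grep command above and caps the result by installed memory. The 1 GiB-per-job figure (MB_PER_JOB) is an assumption, not a number from this article; on a 2 GB ODROID-C2 it yields a single job, in line with the 1 to 2 jobs recommended above. The function name pick_jobs is hypothetical.

```shell
#!/bin/sh
# ASSUMPTION: about 1 GiB of RAM per compile job is a rule of thumb,
# not a figure from this article. Override MB_PER_JOB to tune it.
MB_PER_JOB=${MB_PER_JOB:-1024}

# Print a suggested job count for make -j.
# Optional arguments let you point at other cpuinfo/meminfo files.
pick_jobs() {
  local cpuinfo meminfo cores mem_kb by_mem jobs
  cpuinfo=${1:-/proc/cpuinfo}
  meminfo=${2:-/proc/meminfo}

  # Same count as: grep -c ^processor /proc/cpuinfo
  cores=$(grep -c '^processor' "$cpuinfo")
  # MemTotal is reported in kB; convert to MB, then to whole jobs.
  mem_kb=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
  by_mem=$(( ${mem_kb:-0} / 1024 / MB_PER_JOB ))

  # Use the smaller of the two limits, but never fewer than one job.
  jobs=$cores
  if [ "$by_mem" -lt "$jobs" ]; then jobs=$by_mem; fi
  if [ "$jobs" -lt 1 ]; then jobs=1; fi
  echo "$jobs"
}
```

Usage: make -j"$(pick_jobs)"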

Install OpenCV from Source

Intel® OpenVINO™ toolkit uses the power of OpenCV to accelerate vision-based inferencing. On supported platforms, the CMake process for Intel® OpenVINO™ toolkit downloads a prebuilt OpenCV if no version is installed, but no such version exists for ARM64 platforms, so we must build OpenCV from source. OpenCV requires some additional dependencies. Install the following from your package manager (in this case, apt):

- git

- libgtk2.0-dev

- pkg-config

- libavcodec-dev

- libavformat-dev

- libswscale-dev
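The package list above can be installed in a single apt call. The sketch below, a hypothetical helper named missing_packages, asks dpkg-query which of the listed packages are not yet installed so that only those are passed to apt. The DPKG_QUERY variable is an assumed hook for swapping in a stub during testing; it defaults to the real dpkg-query.

```shell
#!/bin/sh
# The OpenCV build dependencies listed in this article.
OPENCV_DEPS="git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev"

# Hypothetical hook: the command used to query dpkg (defaults to dpkg-query).
DPKG_QUERY=${DPKG_QUERY:-dpkg-query}

# Print, one per line, each package that dpkg does not report as installed.
missing_packages() {
  local pkg status
  for pkg in "$@"; do
    # An installed package reports "install ok installed";
    # an unknown package produces an error and no output.
    status=$("$DPKG_QUERY" -W -f='${Status}' "$pkg" 2>/dev/null)
    case "$status" in
      *" installed") ;;            # already present: skip it
      *) printf '%s\n' "$pkg" ;;   # missing or only partially installed
    esac
  done
}

# Usage (as root), installing only what is missing:
#   apt install -y $(missing_packages $OPENCV_DEPS)
```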

Clone the repository from the OpenCV GitHub* page, prepare the build environment, and build:

$ git clone https://github.com/opencv/opencv.git
$ cd opencv && mkdir build && cd build
$ cmake -DCMAKE_BUILD_TYPE=Release -DCMAKE_INSTALL_PREFIX=/usr/local ..
$ make -j4
$ make install

OpenCV is now fully installed.
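Before building the toolkit, it can help to confirm where the OpenCV install placed its CMake package file, OpenCVConfig.cmake, since the directory containing that file is what the OpenCV_DIR variable is meant to point to. The exact subdirectory depends on the OpenCV version and install layout, so this sketch (a hypothetical helper, find_opencv_dir) searches the install prefix for the file instead of assuming a path.

```shell
#!/bin/sh
# Print the directory containing OpenCVConfig.cmake under an install prefix.
# Returns non-zero (with a message on stderr) if the file is not found.
find_opencv_dir() {
  local prefix config
  prefix=${1:-/usr/local}
  # Take the first match; an install normally contains only one.
  config=$(find "$prefix" -name OpenCVConfig.cmake 2>/dev/null | head -n 1)
  if [ -z "$config" ]; then
    echo "OpenCVConfig.cmake not found under $prefix" >&2
    return 1
  fi
  dirname "$config"
}

# Usage:
#   find_opencv_dir /usr/local
#   export OpenCV_DIR="$(find_opencv_dir /usr/local)"
```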

Download Source Code and Install Dependencies

The open-source version of Intel® OpenVINO™ toolkit is available through GitHub. The repository folder is titled dldt, for Deep Learning Deployment Toolkit.

$ git clone https://github.com/opencv/dldt.git

The repository also has submodules that must be fetched:

$ cd ~/dldt/inference-engine
$ git submodule init
$ git submodule update --recursive

Intel® OpenVINO™ toolkit has a number of build dependencies, and the install_dependencies.sh script fetches them for you. Some changes must be made to the script for it to run properly on ARM* platforms; if any issues arise when running the script, install each dependency individually. On images that ship with a POSIX-compliant shell other than Bash, the script (as of 2019 R1.1) fails because it uses the function keyword and double brackets, which are Bash-only syntax. Using your favorite text editor, make the following changes.

Original Line 8:

function yes_or_no {

Line 8 Edit:

yes_or_no() {

Original Line 23:

if [[ -f /etc/lsb-release ]]; then

Line 23 Edit:

if [ -f /etc/lsb-release ]; then

The script also tries to install two packages that are not needed on ARM: gcc-multilib and g++-multilib. Remove them from the script; otherwise, you will need to install all of the other packages individually.
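The edits described above can also be applied non-interactively with GNU sed. This is a sketch that assumes the script's lines match the original-line text shown above and that the two multilib packages appear as separate words in the apt package list; review the result with diff before running it. The function name patch_install_deps is hypothetical.

```shell
#!/bin/sh
# Apply the Bash-to-POSIX and multilib edits to install_dependencies.sh.
# Requires GNU sed (for -i and the \< \> word boundaries).
patch_install_deps() {
  local script
  script=${1:-install_dependencies.sh}
  cp "$script" "$script.orig"   # keep an untouched copy for comparison
  sed -i \
    -e 's/^\([[:space:]]*\)function yes_or_no {/\1yes_or_no() {/' \
    -e 's|if \[\[ -f /etc/lsb-release ]]; then|if [ -f /etc/lsb-release ]; then|' \
    -e 's/\<gcc-multilib\>//g' \
    -e 's/\<g++-multilib\>//g' \
    "$script"
}

# Usage:
#   cd ~/dldt/inference-engine
#   patch_install_deps install_dependencies.sh
#   diff install_dependencies.sh.orig install_dependencies.sh
```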

Run the script to install:

$ sh ./install_dependencies.sh

If the script finishes successfully, you are ready to build the toolkit. If something fails at this point, install any dependencies listed in the error output and try again.

Building

The first step in the build is to tell the system where OpenCV is installed. Use the following command:

$ export OpenCV_DIR=/usr/local/opencv4

The toolkit uses CMake to guide and simplify the build process. To build both the inference engine and the MYRIAD plugin for Intel® NCS 2, use the following commands:

$ cd ~/dldt/inference-engine
$ mkdir build && cd build
$ cmake -DCMAKE_BUILD_TYPE=Release \
    -DENABLE_MKL_DNN=OFF \
    -DENABLE_CLDNN=OFF \
    -DENABLE_GNA=OFF \
    -DENABLE_SSE42=OFF \
    -DTHREADING=SEQ \
    ..
$ make

If the make command fails because of an issue with an OpenCV library, make sure that you’ve told the system where OpenCV is installed. If the build completes, Intel® OpenVINO™ toolkit is ready to run. The binaries are placed in:

~/dldt/inference-engine/bin/intel64/Release/
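Because the name of the architecture folder under bin/ depends on how CMake detected the platform, a quick way to find the output directory is to search for an executable benchmark_app. The helper below, find_ie_bin_dir, is a hypothetical sketch that assumes the bin/<arch>/Release layout described above.

```shell
#!/bin/sh
# Print the first bin/<arch>/Release directory that contains benchmark_app.
find_ie_bin_dir() {
  local root dir
  root=${1:-$HOME/dldt/inference-engine/bin}
  for dir in "$root"/*/Release; do
    # If the glob matches nothing, $dir is the literal pattern and -x fails.
    if [ -x "$dir/benchmark_app" ]; then
      echo "$dir"
      return 0
    fi
  done
  echo "benchmark_app not found under $root" >&2
  return 1
}

# Usage:
#   cd "$(find_ie_bin_dir)"
```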

Verifying Installation

After successfully completing the inference engine build, you should verify that everything is set up correctly. To verify that the toolkit and Intel® NCS 2 work on your device, complete the following steps:

- Run the sample program benchmark_app to confirm that all libraries load correctly

- Download a trained model

- Select an input for the neural network

- Configure the Intel® NCS 2 Linux* USB driver

- Run benchmark_app with selected model and input.

Sample Programs: benchmark_app

The Intel® OpenVINO™ toolkit includes some sample programs that use the inference engine and Intel® NCS 2. One of them is benchmark_app, a tool for estimating deep learning inference performance. It can be found in:

~/dldt/inference-engine/bin/intel64/Release

Run the following command in that folder to test benchmark_app:

$ ./benchmark_app -h

It should print a help message describing the available options for the program.
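If benchmark_app instead fails to start with an error about a shared library, ldd can show which libraries the dynamic loader cannot resolve. The filter below (missing_libs, a hypothetical name) reads ldd output and prints only the unresolved library names; it relies on the "=> not found" marker that ldd prints for such libraries.

```shell
#!/bin/sh
# Read ldd output on stdin and print each library that is "not found".
missing_libs() {
  # ldd lines look like: "<tab>libname.so => not found"
  awk '/=> not found/ {print $1}'
}

# Usage, from the folder containing benchmark_app:
#   ldd ./benchmark_app | missing_libs
```

If it prints anything, adding the directory that contains those libraries to LD_LIBRARY_PATH is a common fix.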

Downloading a Model

The program needs a model to run. Models for Intel® OpenVINO™ toolkit in Intermediate Representation (IR) format can be obtained by:

- Using the Model Optimizer to convert an existing model from one of the supported frameworks into IR format for the Inference Engine

- Using the Model Downloader tool to download a file from the Open Model Zoo

- Downloading the IR files directly from download.01.org

For our purposes, downloading the files directly is easiest. Use the following commands to grab an age and gender recognition model:

$ cd ~
$ mkdir models
$ cd models
$ wget https://download.01.org/opencv/2019/open_model_zoo/R1/models_bin/age-gender-recognition-retail-0013/FP16/age-gender-recognition-retail-0013.xml
$ wget https://download.01.org/opencv/2019/open_model_zoo/R1/models_bin/age-gender-recognition-retail-0013/FP16/age-gender-recognition-retail-0013.bin

The Intel® NCS 2 requires models optimized for the 16-bit floating-point format known as FP16. If your model differs from the example, it may need to be converted to FP16 with the Model Optimizer.
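The two wget commands follow a fixed pattern: base URL, model name, precision, then the model name again with .xml or .bin. The sketch below (ir_urls, a hypothetical helper) prints both URLs for a given model so other Open Model Zoo models can be fetched the same way. It assumes the 2019 R1 layout used above; other releases, or models not published in FP16, may not be available at these URLs.

```shell
#!/bin/sh
# Base URL copied from the age-gender example (2019 R1 layout).
OMZ_BASE=${OMZ_BASE:-https://download.01.org/opencv/2019/open_model_zoo/R1/models_bin}

# Print the .xml and .bin URLs for a model; precision defaults to FP16,
# the format the Intel NCS 2 requires.
ir_urls() {
  local model precision ext
  model=$1
  precision=${2:-FP16}
  for ext in xml bin; do
    echo "$OMZ_BASE/$model/$precision/$model.$ext"
  done
}

# Usage, from ~/models:
#   wget $(ir_urls age-gender-recognition-retail-0013)
```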

Input for the Neural Network

The last required item is input for the neural network. For the model we have downloaded, you need a 62x62 image with 3 channels of color. This article includes an archive that contains an image that you can use, and it is used in the example below. Copy the archive to a USB Storage Device, connect the device to your board, and use the following commands to mount the drive and copy its contents to a folder called OpenVINO in your home directory:

$ lsblk

The lsblk command lists the available block devices. Note the name of your connected USB drive and use it in place of sdX in the next commands (if the drive has a partition, such as sdX1, use the partition name):

$ mkdir /media/usb
$ mount /dev/sdX /media/usb
$ mkdir ~/OpenVINO
$ cp /media/usb/archive_openvino.tar.gz ~/OpenVINO
$ tar xvzf ~/OpenVINO/archive_openvino.tar.gz -C ~/OpenVINO

The OpenVINO folder should now contain two images, a text file, and a folder named squeezenet. Note that the name of the archive may differ; it should match the one you downloaded from this article.
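To confirm that the archive extracted where the later commands expect it, the sketch below checks for the files those commands use. The file names come from the benchmark_app and classification_sample commands in this article, except squeezenet1.1.bin, which is assumed on the basis that an IR model consists of an .xml and .bin pair; check_files is a hypothetical helper.

```shell
#!/bin/sh
# Report any of the given files (relative to a directory) that do not exist.
# Returns 0 if all are present, 1 otherwise.
check_files() {
  local dir f rc
  dir=$1
  shift
  rc=0
  for f in "$@"; do
    if [ ! -e "$dir/$f" ]; then
      echo "missing: $dir/$f"
      rc=1
    fi
  done
  return $rc
}

# Usage:
#   check_files ~/OpenVINO cat.jpg president_reagan-62x62.png \
#     squeezenet/squeezenet1.1.xml squeezenet/squeezenet1.1.bin
```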

Configure the Intel® NCS 2 Linux* USB Driver

Some udev rules need to be added to allow the system to recognize Intel® NCS 2 USB devices. Inside the attached tar.gz file there is a file called 97-myriad-usbboot.rules_.txt. Copy it to the user’s home directory, then follow the commands below to add the rules to your device.

If the current user is not a member of the users group, run the following command and reboot your device:

$ sudo usermod -a -G users "$(whoami)"

Then, while logged in as a user in the users group:

$ cd ~
$ cp 97-myriad-usbboot.rules_.txt /etc/udev/rules.d/97-myriad-usbboot.rules
$ udevadm control --reload-rules
$ udevadm trigger
$ ldconfig

The USB driver should now be installed correctly. If the Intel® NCS 2 is not detected when running demos, restart your device and try again.
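To check that the system sees the stick at all, you can look for USB vendor ID 03e7 (Intel Movidius) in the output of lsusb, which is provided by the usbutils package. The filter below, a hypothetical helper named count_myriad, counts matching devices; a result of 0 means the stick is not detected.

```shell
#!/bin/sh
# Count lsusb lines whose USB ID has vendor 03e7 (Intel Movidius).
count_myriad() {
  # lsusb lines look like: "Bus 001 Device 004: ID 03e7:2485 ..."
  grep -ci ' ID 03e7:'
}

# Usage:
#   lsusb | count_myriad
# lsusb -d 03e7: lists the same devices directly.
```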

Running benchmark_app

When the model is downloaded, an input image is available, and the Intel® NCS 2 is plugged into a USB port, use the following commands to run benchmark_app:

$ cd ~/dldt/inference-engine/bin/intel64/Release
$ ./benchmark_app -i ~/OpenVINO/president_reagan-62x62.png \
    -m ~/models/age-gender-recognition-retail-0013.xml \
    -pp ./lib -api async -d MYRIAD

This runs the application with the selected options. The -d flag tells the program which device to use for inferencing; MYRIAD selects the MYRIAD plugin, which uses the Intel® NCS 2. After the command executes successfully, the terminal displays inference statistics. If the application ran successfully on your Intel® NCS 2, then Intel® OpenVINO™ toolkit and Intel® NCS 2 are set up correctly for use on your device.

Inferencing at the Edge

Now that you have confirmed that your ARM64 board is set up and working with Intel® NCS 2, you can start building and deploying your AI applications or use one of the prebuilt sample applications to test your use case. Next, we will run a simple image classification using SqueezeNet v1.1 and an image copied to the board. To simplify things, the attached archive contains both the image and the network. The SqueezeNet v1.1 network has already been converted to IR format for use by the Inference Engine.

The following command takes the cat.jpg image included in the archive, loads the squeezenet1.1 network model onto the connected Intel® NCS 2 with the MYRIAD plugin, and infers the output. As before, the sample application is located in:

~/dldt/inference-engine/bin/intel64/Release/

$ ./classification_sample -i ~/OpenVINO/cat.jpg \
    -m ~/OpenVINO/squeezenet/squeezenet1.1.xml -d MYRIAD

The program outputs the top 10 results of the inference and the average image throughput.

If you have come this far, then your device is set up, verified, and ready for you to begin prototyping and deploying your own AI applications using the power of Intel® OpenVINO™ toolkit.

For more complete information about compiler optimizations, see our Optimization Notice at: https://intel.ly/33FbQUU.

Reference

https://software.intel.com/en-us/articles/ARM64-sbc-and-NCS2
