Over the years, I have upgraded my home storage several times. Like many, I started with a consumer-grade NAS. My first was a Netgear ReadyNAS, followed by several QNAP devices. About two years ago, I got tired of the limited CPU and memory of QNAP and similar devices, so I built my own using a Supermicro Xeon D, Proxmox, and FreeNAS. It was great, but adding more drives was a pain. Migrating between RAID-Z levels was basically impossible without lots of extra disks.

The fiasco that was FreeNAS 10 was the final straw. I wanted to be able to add disks in smaller quantities and to have better partial-failure modes, kind of like unRAID, while still being able to scale to as many disks as I wanted. I also wanted to avoid any single point of failure, such as a host bus adapter, motherboard, or power supply.

I had been experimenting with GlusterFS and Ceph, using roughly forty small virtual machines (VMs) to simulate various configurations and failure modes such as power loss, failed disks, corrupt files, and so forth. In the end, GlusterFS was the best at protecting my data: even if GlusterFS itself were a complete loss, my data would be mostly recoverable because it is stored as plain files on an ext4 filesystem on each node. Ceph did a great job too, but it was rather brittle (though recoverable) and difficult to configure.
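
GlusterFS keeps each file as an ordinary file on the brick's own filesystem, next to a hidden `.glusterfs` directory of gfid hard links at the brick root. That layout is what makes recovery without GlusterFS possible. Below is a rough sketch of such a recovery. It assumes a volume without sharding (the default). The helpers `list_brick_files` and `recover_brick` are hypothetical names. They copy a brick's files to a new location while skipping `.glusterfs`. They also skip files with the sticky bit set, on the assumption that those are GlusterFS's zero-length DHT link files (pointers left behind by renames or rebalancing) rather than real data.

```shell
# Hypothetical helper: list the user files on a GlusterFS brick,
# relative to the brick root, skipping GlusterFS's own metadata.
# Sketch only: filenames containing newlines are not handled.
list_brick_files() {
    brick=$1
    # -prune keeps find out of .glusterfs; "! -perm -1000" drops files
    # with the sticky bit set (assumed to be DHT link files).
    (cd "$brick" && find . -path ./.glusterfs -prune -o -type f ! -perm -1000 -print | sort)
}

# Hypothetical helper: copy those files into a recovery directory,
# recreating the relative directory layout and preserving timestamps/modes.
recover_brick() {
    brick=$1
    dest=$2
    list_brick_files "$brick" | while IFS= read -r f; do
        mkdir -p "$dest/$(dirname "$f")"
        cp -p "$brick/$f" "$dest/$f"
    done
}
```

For example, `recover_brick /mnt/gfs/brick/gvol1 /mnt/recovery` would pull one node's files into `/mnt/recovery`. On a replica 2 volume each file sits whole on both bricks of its pair, so recovering one brick from every pair should yield the complete data set.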

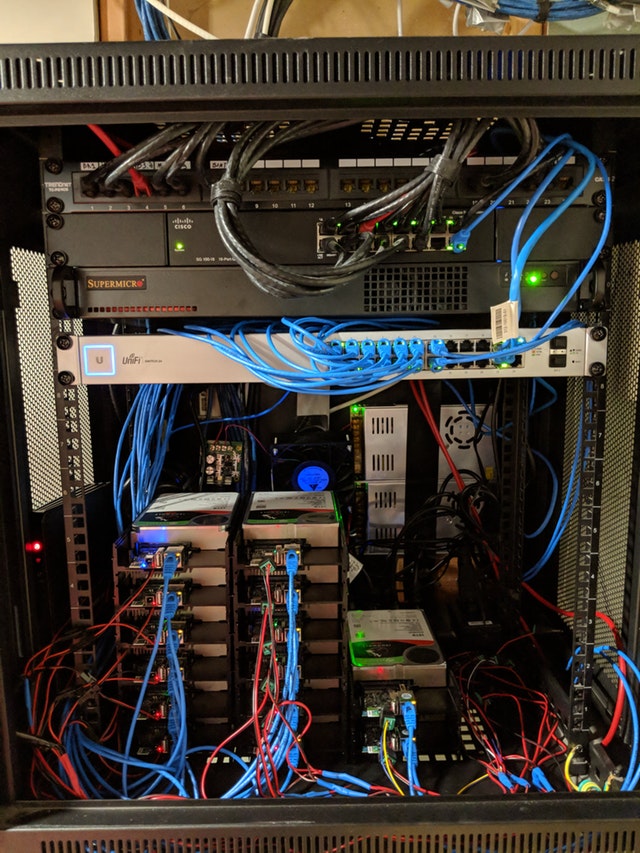

Enter the ODROID-HC2. With 8 cores, 2 GB of RAM, Gigabit Ethernet, and a SATA port, it offers a great base for massively distributed applications. I grabbed four ODROIDs and started retesting GlusterFS. After proving out my idea, I ordered another 16 nodes and got to work migrating my existing array.

In a speed test, I can sustain writes at 8 Gbps and reads at 15 Gbps over the network when operations are sufficiently distributed over the filesystem. Single-file operations are capped at the performance of one node, so about 910 Mbps for both reads and writes.
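
These figures can be sanity-checked with some back-of-the-envelope arithmetic. The sketch below uses plain shell and `awk`, and the helper names are hypothetical. It reads the speeds as gigabits per second, since twenty 1 Gbit links top out around 20 Gbps, and it assumes the 20 nodes and the replica 2 layout described in this article. GlusterFS replication runs in the client, so each byte written to a replica 2 volume is sent to two bricks. That is one plausible reason sustained writes trail reads.

```shell
# Inputs taken from the article: 20 nodes, ~910 Mbps per node, replica 2.
nodes=20
per_node_mbps=910

# Aggregate ceiling in Gbps if every node streams at its single-file rate.
ceiling_gbps() { awk -v n="$1" -v r="$2" 'BEGIN { printf "%.2f\n", n * r / 1000 }'; }

# Convert megabits per second to megabytes per second.
mbps_to_mbytes() { awk -v r="$1" 'BEGIN { printf "%.2f\n", r / 8 }'; }

# Client-side replication: brick-side write traffic = client write rate * replica count.
brick_write_gbps() { awk -v w="$1" -v k="$2" 'BEGIN { printf "%.2f\n", w * k }'; }

echo "aggregate ceiling: $(ceiling_gbps "$nodes" "$per_node_mbps") Gbps"
echo "single file:       $(mbps_to_mbytes "$per_node_mbps") MB/s"
echo "brick-side writes: $(brick_write_gbps 8 2) Gbps"
```

With these inputs the script reports an 18.20 Gbps ceiling, 113.75 MB/s for a single file, and 16.00 Gbps of brick-side traffic behind the 8 Gbps of client writes. The measured 15 Gbps of reads therefore sits a little above 80% of the ceiling.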

In terms of power consumption, the entire setup uses about 250 watts with moderate CPU load and a high disk load (rebalancing the array). That figure includes a pfSense box, 3 switches, 2 UniFi access points, a Verizon Fios modem, and several VMs on the Xeon D host. Where I live, in New Jersey, that works out to about $350 a year in electricity.

I'm writing this article because I couldn't find much information about using the ODROID-HC2 at any meaningful scale.
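
The yearly cost follows from running the whole 250 W load around the clock. This sketch uses hypothetical helper names. It converts watts to annual kWh and backs out the electricity rate that the article's $350 figure implies. Keep in mind that the 250 W covers the entire setup, not only the ODROIDs.

```shell
# Hours in a (non-leap) year.
HOURS_PER_YEAR=$((24 * 365))

# Annual energy in kWh for a constant load in watts.
annual_kwh() { awk -v w="$1" -v h="$HOURS_PER_YEAR" 'BEGIN { printf "%.0f\n", w * h / 1000 }'; }

# Annual cost for a constant load (watts) at a given price per kWh.
annual_cost() { awk -v w="$1" -v p="$2" -v h="$HOURS_PER_YEAR" 'BEGIN { printf "%.2f\n", w * h / 1000 * p }'; }

# Price per kWh implied by a load (watts) and a yearly bill.
implied_rate() { awk -v w="$1" -v c="$2" -v h="$HOURS_PER_YEAR" 'BEGIN { printf "%.3f\n", c / (w * h / 1000) }'; }

echo "energy: $(annual_kwh 250) kWh/year"
echo "rate:   \$$(implied_rate 250 350) per kWh"
```

At 250 W that comes to 2190 kWh a year, so $350 corresponds to roughly $0.16 per kWh.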

Parts list

- ODROID-HC2 https://www.hardkernel.com/main/products/prdt_info.php?g_code=G151505170472

- 32 GB microSD card. You can get by with just 8 GB, but the savings are negligible. https://www.amazon.com/gp/product/B06XWN9Q99/

- Slim Cat6 Ethernet cables https://www.amazon.com/gp/product/B00BIPI9XQ/

- 200CFM 12V 120mm (5”) fan https://www.amazon.com/gp/product/B07C6HR3PP/

- 12V PWM speed controller, to throttle the fan. https://www.amazon.com/gp/product/B00RXKNT5S/

- 5.5mm x 2.1mm (0.21” x 0.08”) barrel connectors for powering the ODROIDs. https://www.amazon.com/gp/product/B01N38H40P/

- 12V 30A power supply. Can power 12 ODROIDs with 3.5” HDDs without staggered spin-up. https://www.amazon.com/gp/product/B00D7CWSCG/

- 24-port gigabit managed switch from Unifi https://www.amazon.com/gp/product/B01LZBLO0U/
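
The power supply's rating of 12 ODROIDs implies a per-node budget. Here is a small sketch using shell integer arithmetic, with hypothetical helper names. 12 V × 30 A gives 360 W. Spread over 12 nodes, that leaves 2.5 A (30 W) each to cover a simultaneous drive spin-up. At 12 nodes per supply, 20 nodes need at least two supplies. The article does not say how many supplies the build actually uses. That count is only what the arithmetic implies, and it leaves out anything else, such as the fan, that might share a supply.

```shell
# Supply capacity in watts: volts * amps.
psu_watts() { echo $(( $1 * $2 )); }

# Per-node current budget in milliamps: supply amps spread over N nodes.
per_node_ma() { echo $(( $1 * 1000 / $2 )); }

# Minimum number of supplies: ceil(nodes / nodes_per_supply) in integer math.
supplies_needed() { echo $(( ($1 + $2 - 1) / $2 )); }

echo "supply:   $(psu_watts 12 30) W"
echo "per node: $(per_node_ma 30 12) mA at 12 V"
echo "supplies: $(supplies_needed 20 12) for 20 nodes"
```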

The crazy thing is that there isn't much configuration for GlusterFS. That’s what I love about it. It takes literally three commands to get GlusterFS up and running after you get the OS installed and the disks formatted. I'll probably post a write-up on my GitHub at some point in the next few weeks. First, I want to test out Presto (https://prestodb.io/), a distributed SQL engine, on these puppies before doing the write-up.

    $ sudo apt-get install glusterfs-server glusterfs-client
    $ sudo gluster peer probe gfs01.localdomain ... gfs20.localdomain
    $ sudo gluster volume create gvol0 replica 2 transport tcp gfs01.localdomain:/mnt/gfs/brick/gvol1 ... gfs20.localdomain:/mnt/gfs/brick/gvol1
    $ sudo gluster volume start gvol0

For comments, questions, and suggestions, please visit the original article at https://www.reddit.com/r/DataHoarder/comments/8ocjxz/200tb_glusterfs_odroid_hc2_build/.
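
The `...` in the setup commands stands for the other hosts and bricks. `gluster peer probe` adds one host per invocation, and `gluster volume create` needs every brick listed. GlusterFS groups bricks into replica sets in the order given, so with replica 2, adjacent hosts mirror each other, and the brick count must be a multiple of the replica count. The sketch below assumes the article's `gfsNN.localdomain` naming and brick path and that it is run from gfs01. `gluster_setup_cmds` is a hypothetical helper that only prints the commands so they can be reviewed before running them.

```shell
# Hypothetical helper: print (not run) the peer-probe, volume-create and
# volume-start commands for N nodes named gfs01.localdomain ... gfsNN.localdomain.
gluster_setup_cmds() {
    n=$1
    replica=$2
    brick=/mnt/gfs/brick/gvol1
    # Bricks form replica sets in order, so the count must divide evenly.
    if [ $(( n % replica )) -ne 0 ]; then
        echo "error: $n bricks is not a multiple of replica $replica" >&2
        return 1
    fi
    # One probe per peer; gfs01 is assumed to be the local node, so skip it.
    i=2
    while [ "$i" -le "$n" ]; do
        printf 'sudo gluster peer probe gfs%02d.localdomain\n' "$i"
        i=$(( i + 1 ))
    done
    # Build the space-separated brick list in host order.
    bricks=""
    i=1
    while [ "$i" -le "$n" ]; do
        bricks="$bricks $(printf 'gfs%02d.localdomain:%s' "$i" "$brick")"
        i=$(( i + 1 ))
    done
    echo "sudo gluster volume create gvol0 replica $replica transport tcp$bricks"
    echo "sudo gluster volume start gvol0"
}
```

Running `gluster_setup_cmds 20 2` prints 19 probes and a create command that pairs gfs01 with gfs02, gfs03 with gfs04, and so on. After checking the output, it could be piped to `sh`.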
